
We present the Neural Physics Engine (NPE), a framework for learning simulators of intuitive physics that naturally generalize across variable object count and different scene configurations. We propose a factorization of a physical scene into composable object-based representations and a neural network architecture whose compositional structure factorizes object dynamics into pairwise interactions. Like a symbolic physics engine, the NPE is endowed with generic notions of objects and their interactions; realized as a neural network, it can be trained via stochastic gradient descent to adapt to specific object properties and dynamics of different worlds. We evaluate the efficacy of our approach on simple rigid body dynamics in two-dimensional worlds. By comparing to less structured architectures, we show that the NPE's compositional representation of the structure in physical interactions improves its ability to predict movement, generalize across variable object count and different scene configurations, and infer latent properties of objects such as mass.

Predictions

Below are some predictions from the model:

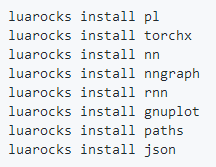
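The abstract's central idea, factorizing an object's dynamics into a sum of pairwise interactions, can be sketched in plain Python. This is not the repository's Torch7 implementation: `TinyNPE`, the object state `[x, y, vx, vy, mass]`, the untrained random weights, and the choice to decode a velocity are all assumptions made for illustration. The `neighborhood` distance cutoff is also an assumption, loosely suggested by the `nbrhd` token in the checkpoint paths further down.

```python
import math
import random
from typing import List, Sequence

Vec = List[float]


def linear(weights: Sequence[Sequence[float]], x: Sequence[float]) -> Vec:
    """Matrix-vector product with no bias term, one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(x: Sequence[float]) -> Vec:
    return [max(0.0, v) for v in x]


class TinyNPE:
    """Hypothetical, untrained illustration of a pairwise-factorized dynamics model.

    Object state is assumed to be [x, y, vx, vy, mass]. A shared encoder embeds
    each (focus, context) pair, the embeddings are summed, and a decoder maps
    [focus state, summed effect] to a predicted velocity [vx, vy].
    """

    def __init__(self, state_dim: int = 5, hidden: int = 8, seed: int = 0,
                 neighborhood: float = math.inf) -> None:
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> List[Vec]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.hidden = hidden
        self.encoder = init(hidden, 2 * state_dim)  # shared by every pair
        self.decoder = init(2, state_dim + hidden)  # outputs (vx, vy)
        self.neighborhood = neighborhood            # assumed distance cutoff

    def predict_velocity(self, focus: Vec, context: List[Vec]) -> Vec:
        effect = [0.0] * self.hidden
        for other in context:
            distance = math.hypot(other[0] - focus[0], other[1] - focus[1])
            if distance > self.neighborhood:
                continue  # objects outside the neighborhood contribute nothing
            pair = relu(linear(self.encoder, focus + other))
            effect = [e + p for e, p in zip(effect, pair)]
        return linear(self.decoder, focus + effect)

    def step(self, states: List[Vec]) -> List[Vec]:
        """Treat each object in turn as the focus and all others as its context."""
        return [self.predict_velocity(s, states[:i] + states[i + 1:])
                for i, s in enumerate(states)]


if __name__ == "__main__":
    balls = [[0.0, 0.0, 1.0, 0.0, 1.0],
             [1.0, 0.5, 0.0, -1.0, 5.0],
             [3.0, 3.0, 0.0, 0.0, 1.0]]
    model = TinyNPE()
    print(len(model.step(balls)))                                  # 3 predictions
    print(len(model.step(balls + [[5.0, 1.0, -1.0, 0.0, 25.0]])))  # same weights, 4 objects
```

Because the pairwise effects are summed, the same weights accept any number of context objects and the result does not depend on their order, which is the structural property the abstract credits for generalizing across object counts.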

Requirements

- Torch7
- [Node.js v6.2.1](https://nodejs.org/en/)

Dependencies

To install Lua dependencies, run:

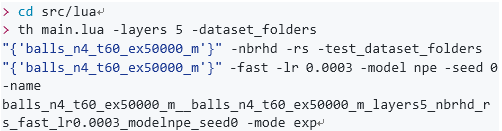

To install JS dependencies, run:

Instructions

NOTE: The code in this repository is still in the process of being cleaned up.

Generating Data

The code to generate data is adapted from the demo code in matter-js.

This is an example of generating 50000 trajectories of 4 balls of variable mass over 60 timesteps. It will create a folder `balls_n4_t60_s50000_m` in the `data/` folder.

This is an example of generating 50000 trajectories of 2 balls over 60 timesteps for wall geometry "U". It will create a folder `walls_n2_t60_s50000_wU` in the `data/` folder.

Generating 50000 trajectories takes quite a bit of time; 200 trajectories are enough for debugging. In that case, you may want to change the flags in the examples below accordingly.

Visualization

Trajectory data is stored in a `.json` file. You can visualize a trajectory by opening `src/js/demo/render.html` in your browser and passing in the `.json` file.

Training the Model

This is an example of training the model on the `balls_n4_t60_s50000_m` dataset. The model checkpoints are saved in: `src/lua/logs/balls_n4_t60_ex50000_m__balls_n4_t60_ex50000_m_layers5_nbrhd_rs_fast_lr0.0003_modelnpe_seed0`.

If you are comfortable looking at code that has not been cleaned up yet, please check out the flags in `src/lua/main.lua`.

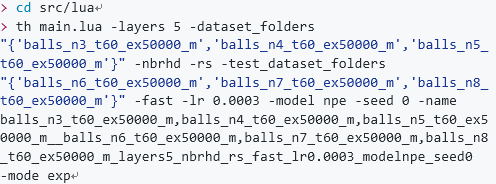
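The data-generation examples encode their parameters in the folder name (object count, timesteps, number of trajectories, and either variable mass or a wall geometry). A small parser makes that convention explicit, for instance to check which object counts a set of generated folders covers. It is a hypothetical helper, not part of the repository, and its regular expression is inferred only from the names that appear in this README; other generator flags may produce names it rejects.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical pattern inferred from the example names in this README,
# e.g. "balls_n4_t60_s50000_m" and "walls_n2_t60_s50000_wU". Checkpoint paths
# spell the trajectory count as "ex50000", so both "s" and "ex" are accepted.
DATASET_NAME = re.compile(
    r"^(?P<world>balls|walls)"
    r"_n(?P<objects>\d+)"              # number of objects
    r"_t(?P<timesteps>\d+)"            # timesteps per trajectory
    r"_(?:s|ex)(?P<trajectories>\d+)"  # number of trajectories
    r"(?:_(?P<suffix>m|w[A-Z]))?$"     # "m" = variable mass, "wX" = wall geometry X
)


def parse_dataset_name(name: str) -> Dict[str, Union[str, int, bool, None]]:
    match = DATASET_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized dataset name: {name!r}")
    suffix: Optional[str] = match.group("suffix")
    return {
        "world": match.group("world"),
        "objects": int(match.group("objects")),
        "timesteps": int(match.group("timesteps")),
        "trajectories": int(match.group("trajectories")),
        "variable_mass": suffix == "m",
        "wall_geometry": suffix[1:] if suffix is not None and suffix.startswith("w") else None,
    }


print(parse_dataset_name("balls_n4_t60_s50000_m"))
# {'world': 'balls', 'objects': 4, 'timesteps': 60, 'trajectories': 50000, 'variable_mass': True, 'wall_geometry': None}
print(parse_dataset_name("walls_n2_t60_s50000_wU")["wall_geometry"])  # U
```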

Here is an example of training on 3, 4, and 5 balls of variable mass and testing on 6, 7, and 8 balls of variable mass, provided that those datasets have been generated. The model checkpoints are saved in: `src/lua/logs/balls_n3_t60_ex50000_m,balls_n4_t60_ex50000_m,balls_n5_t60_ex50000_m__balls_n6_t60_ex50000_m,balls_n7_t60_ex50000_m,balls_n8_t60_ex50000_m_layers5_nbrhd_rs_fast_lr0.0003_modelnpe_seed0`.

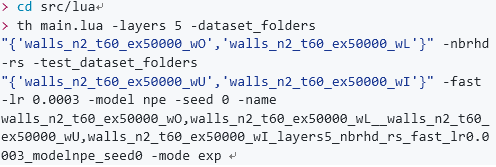

Here is an example of training on "O" and "L" wall geometries and testing on "U" and "I" wall geometries, provided that those datasets have been generated. The model checkpoints are saved in: `src/lua/logs/walls_n2_t60_ex50000_wO,walls_n2_t60_ex50000_wL__walls_n2_t60_ex50000_wU,walls_n2_t60_ex50000_wI_layers5_nbrhd_rs_fast_lr0.0003_modelnpe_seed0`.

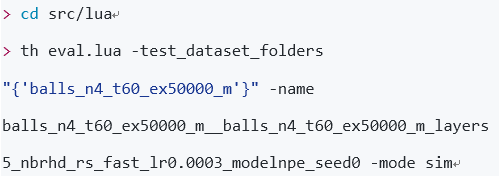
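All three checkpoint paths in this README follow one visible pattern: comma-joined training datasets, a double underscore, comma-joined test datasets, then a hyperparameter suffix. A helper that rebuilds it can be handy for locating a run's checkpoints from a script. This is a sketch inferred from those three examples only; `checkpoint_dir` and its parameters are hypothetical, and the actual name is assembled from the flags in `src/lua/main.lua`, so flags not shown here may change it.

```python
from typing import Sequence


def checkpoint_dir(train: Sequence[str], test: Sequence[str], layers: int = 5,
                   lr: float = 0.0003, model: str = "npe", seed: int = 0) -> str:
    """Hypothetical reconstruction of the checkpoint path pattern shown in this README.

    Training datasets are comma-joined, followed by "__", then the comma-joined
    test datasets and a hyperparameter suffix. "nbrhd_rs_fast" is copied verbatim
    from the example paths; its meaning is not documented here.
    """
    suffix = f"layers{layers}_nbrhd_rs_fast_lr{lr}_model{model}_seed{seed}"
    return f"src/lua/logs/{','.join(train)}__{','.join(test)}_{suffix}"


walls = checkpoint_dir(["walls_n2_t60_ex50000_wO", "walls_n2_t60_ex50000_wL"],
                       ["walls_n2_t60_ex50000_wU", "walls_n2_t60_ex50000_wI"])
print(walls)
```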

Be sure to look at the command-line flags in `main.lua` for more details. You may want to reduce the number of training iterations if you are just debugging. The code defaults to CPU, but you can switch to GPU with the `-cuda` flag.

Prediction

This is an example of running simulations using a trained model that was saved in: `src/lua/logs/balls_n4_t60_ex50000_m__balls_n4_t60_ex50000_m_layers5_nbrhd_rs_fast_lr0.0003_modelnpe_seed0`.

This is an example of running simulations using a trained model that was saved in: